This page is no longer being updated.

The leaderboards have not been updated for a while and will not be updated. Please consult recent publications for the state of the art. The hidden test sets have been publicly released.

Cornell Natural Language for Visual Reasoning

The Natural Language for Visual Reasoning corpora are two language grounding datasets containing natural language sentences grounded in images. The task is to determine whether a sentence is true about a visual input. The data was collected through crowdsourcing, and solving the task requires reasoning about sets of objects, comparisons, and spatial relations. There are two corpora: NLVR, with synthetically generated images, and NLVR2, which includes natural photographs.

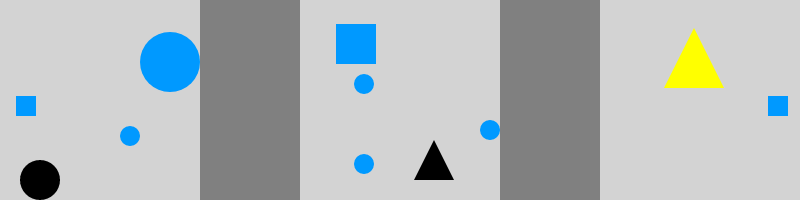

Natural Language for Visual Reasoning for Real

NLVR2 contains 107,292 examples of human-written English sentences grounded in pairs of photographs. NLVR2 retains the linguistic diversity of NLVR, while including much more visually complex images.


We only publicly release the sentence annotations and original image URLs, and scripts that download the images from the URLs. If you would like direct access to the images, please fill out this Google Form. This form asks for your basic information and asks you to agree to our Terms of Service.
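As a rough illustration of working with the released annotations, the sketch below parses JSON-lines records and pulls out the sentence, label, and the two image URLs. The field names (`identifier`, `sentence`, `label`, `left_url`, `right_url`), the string-valued label, and the sample record are assumptions made for this example and should be checked against the released files; the snippet does not download anything.

```python
import json


def read_examples(lines):
    """Parse JSON-lines annotation records, skipping blank lines."""
    return [json.loads(line) for line in lines if line.strip()]


def image_urls(example):
    """Return the (left, right) image URLs of one example.

    The key names are assumptions for this sketch, not a documented schema.
    """
    return example["left_url"], example["right_url"]


def is_true(example):
    """Interpret the label as a boolean (assumes labels like "True"/"False")."""
    return str(example["label"]).strip().lower() == "true"


# A made-up record in the assumed format, used only to exercise the helpers.
sample_lines = [
    '{"identifier": "example-0", "sentence": "Placeholder sentence.", '
    '"label": "True", "left_url": "https://example.com/left.jpg", '
    '"right_url": "https://example.com/right.jpg"}',
    "",
]

examples = read_examples(sample_lines)
for ex in examples:
    left, right = image_urls(ex)
    print(ex["identifier"], is_true(ex), left, right)
```

Keeping parsing separate from downloading makes it easy to inspect the URLs first and to skip any that no longer resolve.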

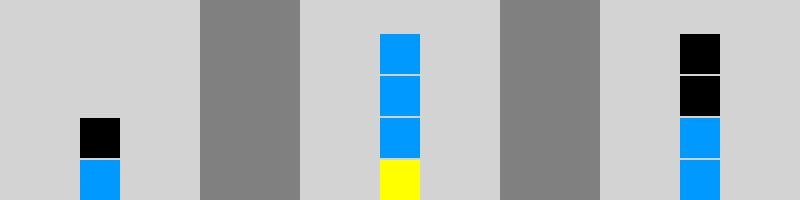

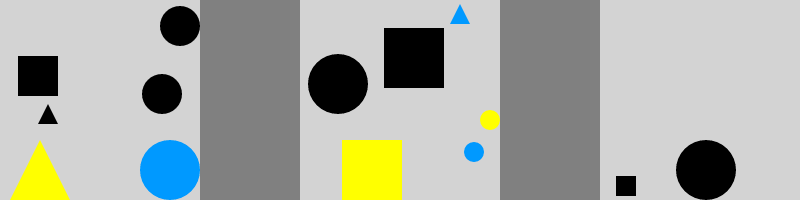

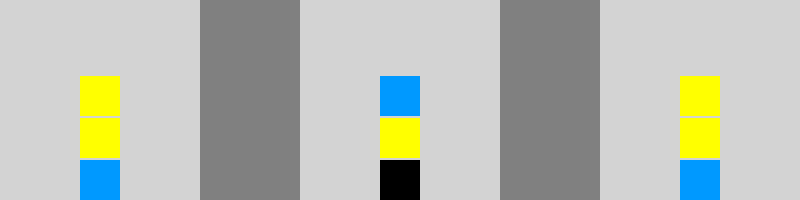

Natural Language for Visual Reasoning

NLVR contains 92,244 pairs of human-written English sentences grounded in synthetic images. Because the images are synthically generated, this dataset can be used for semantic parsing.


More examples (from the development set) are available here.

Leaderboards

Update: as of August 18, 2022, both the NLVR and NLVR2 hidden test sets are released to the public, and we will no longer be taking requests to run on the hidden test set. For NLVR, all of the data is available on Github. For NLVR2, only sentences, labels, and image URLs are available on Github. If you would like direct access to the images, please fill out the Google Form.

Questions?

Please visit our Github issues page or email us at


Acknowledgments

This research was supported by the NSF (CRII-1656998), a Facebook ParlAI Research Award, an AI2 Key Scientific Challenges Award, Amazon Cloud Credits Grant, and support from Women in Technology New York. This material is based on work supported by the National Science Foundation Graduate Research Fellowship under Grant No. DGE-1650441. We thank Mark Yatskar and Noah Snavely for their comments and suggestions, and the workers who participated in our data collection for their contributions.

Also thanks to SQuAD for allowing us to use their code to create this website!

NLVR2 Leaderboard

NLVR2 presents the task of determining whether a natural language sentence is true about a pair of photographs. As of August 18, 2022, the NLVR2 hidden test set is released to the public, and we will no longer be taking requests to run on the hidden test set. You can find the sentences, labels, and image URLs for the hidden test set in the Github repository. If you would like direct access to the images, please fill out the Google Form.

| Rank | Model | Dev. (Acc) | Test-P (Acc) | Test-U (Acc) | Test-U (Cons) |
|---|---|---|---|---|---|
| - | Human Performance, Cornell University (Suhr et al. 2019) | 96.2 | 96.3 | 96.1 | - |
| 1 (Oct 14, 2019) | UNITER, Microsoft Dynamics 365 AI Research (Chen et al. 2019) | 78.4 | 79.5 | 80.4 | 50.8 |
| 2 (Aug 20, 2019) | LXMERT, UNC Chapel Hill (Tan and Bansal 2019) | 74.9 | 74.5 | 76.2 | 42.1 |
| 3 (Aug 11, 2019) | VisualBERT, UCLA & AI2 & PKU (Li et al. 2019) | 67.4 | 67.0 | 67.3 | 26.9 |
| 4 (Nov 1, 2018) | MaxEnt, Cornell University (Suhr et al. 2019) | 54.1 | 54.8 | 53.5 | 12.0 |
| 5 (Nov 1, 2018) | CNN+RNN, Cornell University (Suhr et al. 2019) | 53.4 | 52.4 | 53.2 | 11.2 |
| 6 (Nov 1, 2018) | FiLM, MILA, run by Cornell University (Perez et al. 2018) | 51.0 | 52.1 | 53.0 | 10.6 |
| 7 (Nov 1, 2018) | Image Only (CNN), Cornell University (Suhr et al. 2019) | 51.6 | 51.9 | 51.9 | 7.1 |
| 8 (Nov 1, 2018) | N2NMN (policy search from scratch), UC Berkeley, run by Cornell University (Hu et al. 2017) | 51.0 | 51.1 | 51.5 | 5.0 |
| 9 (Nov 1, 2018) | Majority Class, Cornell University (Suhr et al. 2019) | 50.9 | 51.1 | 51.4 | 4.6 |
| 10 (Nov 1, 2018) | Text Only (RNN), Cornell University (Suhr et al. 2019) | 50.9 | 51.1 | 51.4 | 4.6 |
| 11 (Nov 1, 2018) | MAC-Network, Stanford University, run by Cornell University (Hudson and Manning 2018) | 50.8 | 51.4 | 51.2 | 11.2 |
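This page does not define the Acc and Cons columns. The sketch below assumes that accuracy is the fraction of examples predicted correctly, and that consistency is the fraction of unique sentences for which every associated example is predicted correctly, which is how the metric is usually described for these corpora. The record layout `(sentence_id, gold, predicted)` and the toy data are hypothetical.

```python
from collections import defaultdict


def accuracy(records):
    """Fraction of examples whose prediction matches the gold label."""
    correct = sum(1 for _, gold, pred in records if gold == pred)
    return correct / len(records)


def consistency(records):
    """Fraction of unique sentences with all of their examples correct."""
    outcomes_by_sentence = defaultdict(list)
    for sentence_id, gold, pred in records:
        outcomes_by_sentence[sentence_id].append(gold == pred)
    consistent = sum(1 for outcomes in outcomes_by_sentence.values() if all(outcomes))
    return consistent / len(outcomes_by_sentence)


# Toy predictions: (sentence_id, gold label, predicted label).
records = [
    ("s1", True, True),
    ("s1", False, False),
    ("s2", True, True),
    ("s2", True, False),   # one miss makes sentence s2 inconsistent
    ("s3", False, False),
]

print(f"accuracy:    {accuracy(records):.3f}")
print(f"consistency: {consistency(records):.3f}")
```

Under this definition consistency can never exceed accuracy by much on a balanced dataset, because a model must get every example for a sentence right to earn credit. This is in line with the leaderboard, where Cons is well below Acc.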

NLVR Leaderboard

NLVR presents the task of determining whether a natural language sentence is true about a synthetically generated image. We divide results into whether they process the image pixels directly (Images) or whether they use the structured representations of the images (Structured Representations). As of July 29, 2022, the NLVR hidden test set (Test-U) is released to the public, and we will no longer be taking requests to run on the hidden test set. You can find the data, including sentences, labels, and images, in the Github repository.
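To make the distinction concrete, here is a minimal sketch of how a sentence could be evaluated against a structured representation instead of pixels. The schema (an image as a list of boxes, each box a list of objects with `color` and `shape` keys) and the helper names are hypothetical and chosen for illustration; the released data may use different keys and carry more attributes.

```python
def box_contains(box, color, shape):
    """True if the box holds at least one object with this color and shape."""
    return any(obj["color"] == color and obj["shape"] == shape for obj in box)


def count_boxes_with(image, color, shape):
    """Number of boxes in the image containing a matching object."""
    return sum(1 for box in image if box_contains(box, color, shape))


def exactly_n_boxes_with(image, n, color, shape):
    """Truth value of 'exactly n boxes contain a <color> <shape>'."""
    return count_boxes_with(image, color, shape) == n


# A hypothetical image with three boxes.
image = [
    [{"color": "yellow", "shape": "triangle"}, {"color": "black", "shape": "circle"}],
    [{"color": "blue", "shape": "square"}],
    [{"color": "yellow", "shape": "triangle"}],
]

# "There are exactly two boxes with a yellow triangle."
print(exactly_n_boxes_with(image, 2, "yellow", "triangle"))
# "There is a box with a blue circle."
print(count_boxes_with(image, "blue", "circle") >= 1)
```

A semantic parser in this setting would map each sentence to a small program like the calls above and execute it on the structured representation to get a truth value.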

Images

| Rank | Model | Dev. (Acc) | Test-P (Acc) | Test-U (Acc) | Test-U (Cons) |
|---|---|---|---|---|---|
| - | Human Performance, Cornell University | 94.6 | 95.4 | 94.9 | - |
| 1 (Apr 20, 2018) | CNN-BiATT, UNC Chapel Hill (Tan and Bansal 2018) | 66.9 | 69.7 | 66.1 | 28.9 |
| 2 (Nov 1, 2018) | N2NMN (policy search from scratch), UC Berkeley, run by Cornell University (Hu et al. 2017) | 65.3 | 69.1 | 66.0 | 17.7 |
| 3 (Apr 22, 2017) | Neural Module Networks, UC Berkeley, run by Cornell University (Andreas et al. 2016) | 63.1 | 66.1 | 62.0 | - |
| 4 (Nov 1, 2018) | FiLM, MILA, run by Cornell University (Perez et al. 2018) | 60.1 | 62.2 | 61.2 | 18.1 |
| 5 (Apr 22, 2017) | Majority Class, Cornell University (Suhr et al. 2017) | 55.3 | 56.2 | 55.4 | - |
| 6 (Nov 1, 2018) | MAC-Network, Stanford University, run by Cornell University (Hudson and Manning 2018) | 55.4 | 57.6 | 54.3 | 8.6 |
| Unranked (Sept 7, 2018) | CMM, Chinese Academy of Sciences (Yao et al. 2018) | 68.0 | 69.9 | - | - |
| Unranked (May 23, 2018) | W-MemNN, Federico Santa María Technical University & Pontificia Universidad Católica de Valparaíso (Pavez et al. 2018) | 65.6 | 65.8 | - | - |

Structured Representations

| Rank | Model | Dev. (Acc) | Test-P (Acc) | Test-U (Acc) | Test-U (Cons) |
|---|---|---|---|---|---|
| - | Human Performance, Cornell University | 94.6 | 95.4 | 94.9 | - |
| 1 (July 14, 2021) | Consistency-based Parser, UPenn; UC Irvine; AI2 (Gupta et al. 2021) | 89.6 | 86.3 | 89.5 | 74.0 |
| 2 (June 2, 2019) | Iterative Search, AI2; Mila; UW; CMU (Dasigi et al. 2019) | 85.4 | 82.4 | 82.9 | 64.3 |
| 3 (Nov 14, 2017) | AbsTAU, Tel-Aviv University (Goldman et al. 2018) | 85.7 | 84.0 | 82.5 | 63.9 |
| 4 (Apr 4, 2018) | BiATT-Pointer, UNC Chapel Hill (Tan and Bansal 2018) | 74.6 | 73.9 | 71.8 | 37.2 |
| 5 (Apr 22, 2017) | MaxEnt, Cornell University (Suhr et al. 2017) | 68.0 | 67.7 | 67.8 | - |
| 6 (Apr 22, 2017) | Majority Class, Cornell University (Suhr et al. 2017) | 55.3 | 56.2 | 55.4 | - |